People around the world, especially those with injuries or limited mobility, experience sit-to-stand (STS) difficulties in their everyday lives. An August 12, 2022 New York Times article, “Embarrassing, Uncomfortable and Risky: What Flying is Like for Passengers Who Use Wheelchairs,” details some of these challenges. The author described the discomfort, danger, and embarrassment that wheelchair users experience on airlines. Travelers with limited mobility often need two attendants to support them while sitting down in and standing up from the wheelchair and while moving in and out of the airline seat.

But travelers aren’t alone. The World Health Organization (WHO) estimates that more than one billion people live with disabilities, and 75 million require wheelchairs. Mobility challenges often create uncomfortable, dangerous, and embarrassing experiences for individuals at hospitals, nursing homes, assisted living communities, physical-therapy facilities, and even private homes. Limited mobility also prevents individuals from exercising to support their physical health.

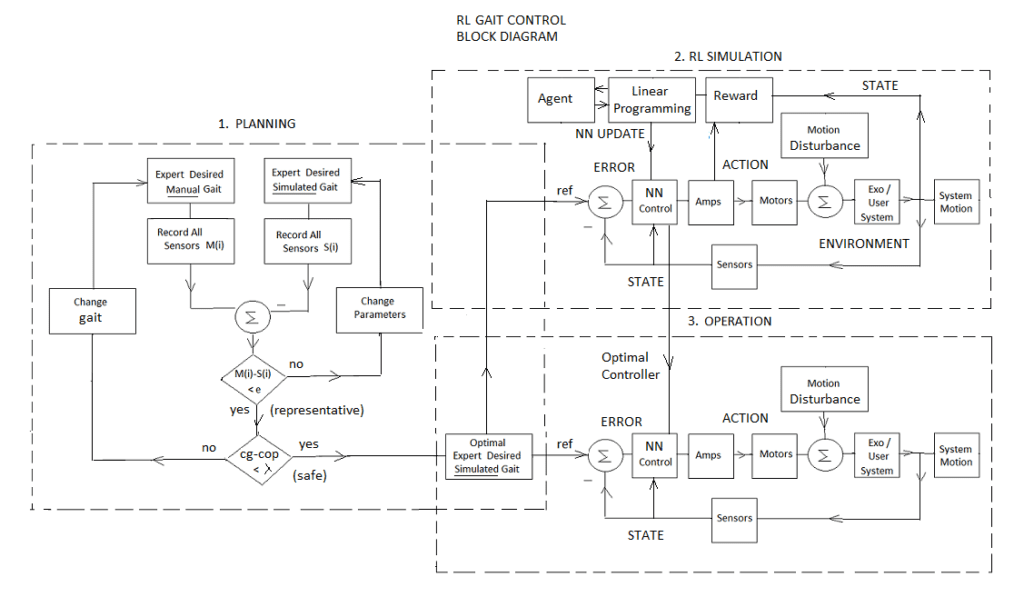

Powered STS solutions are evolving to improve the global community’s comfort, motion support, quality of life, and longevity. The next generation of robotic chairs includes a new artificial intelligence/machine learning (AI/ML) approach, dynamic programming, single-axis exoskeleton, sensors, and reinforcement learning (RL) motion control. The goal is to use AI/ML to reach optimal cost/performance solutions for nonlinear, random STS environments and create building blocks for higher-level AI/ML exoskeletons.

State-of-the-art powered STS solutions

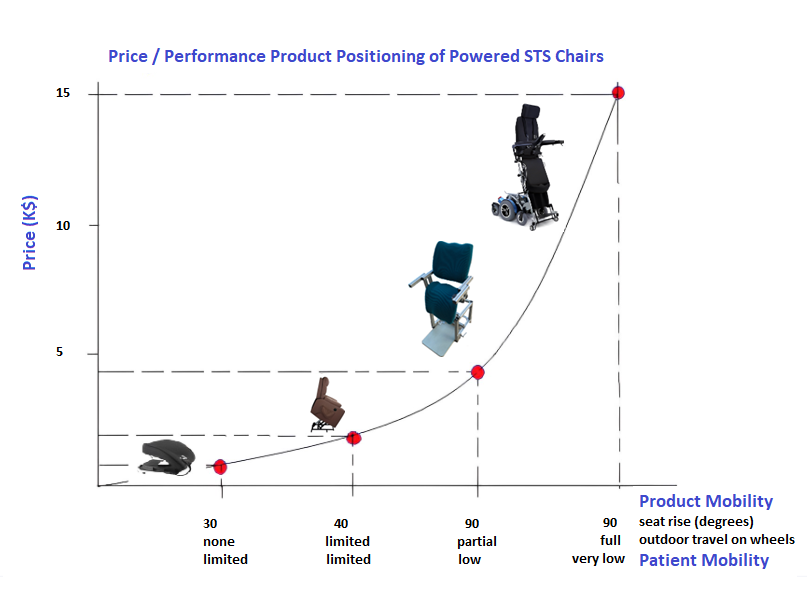

Powered STS systems help people with limited mobility and those who assist them in moving safely, comfortably, effectively, and independently. Their designs include kinematic linkages and actuators powered by motion controllers that automate the chairs to reduce or eliminate user muscle and joint torques required for motion. And their price points generally align with their mobile assistance abilities.

The Karman Standing Wheelchair is a state-of-the-art solution with advanced functionality and adjustability features for comfort and safety. It weighs 180 lb, costs about $15,000, and provides a standing angle nearly perpendicular to the floor, so the user needs only minimal knee and hip moments to reach a fully erect position.

Lift chairs are commonly used as powered comfort chairs. They cost a few hundred dollars and are available in various reclining options. The reclining angle of the lift chair seat is limited to a partial stand position, which requires some user knee and hip moments to reach a complete standing posture.

Powered seats, made by Carex, are portable lifting seats for use on sofas and armchairs. They cost less than $200, depending on their features. A typical seat has a limited lift angle and provides up to 70% lift assistance, according to Carex.

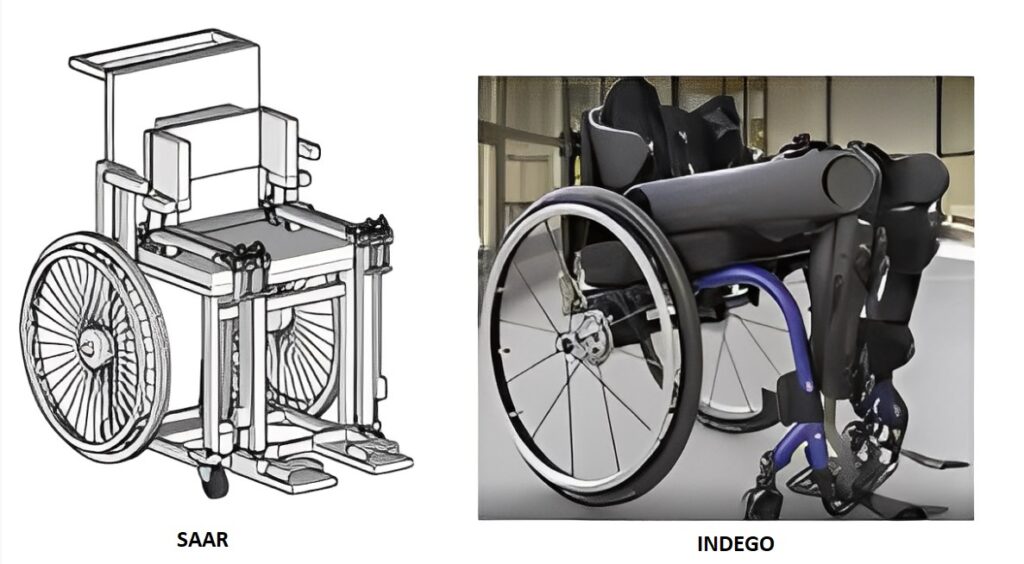

RoboChair, made by SAAR, is a next-generation powered STS system prototype (currently not for sale). It monitors user posture for comfort and safety and includes AI/ML options for various applications, such as work, dining, resting, physical therapy, and routine exercises. This chair costs a few thousand dollars and is intended to support people in either a stationary or a wheelchair version. It’s designed for comfort as well as minimal knee and hip moments, and it has wheels for easy maneuverability.

Robotic chairs with AI/ML

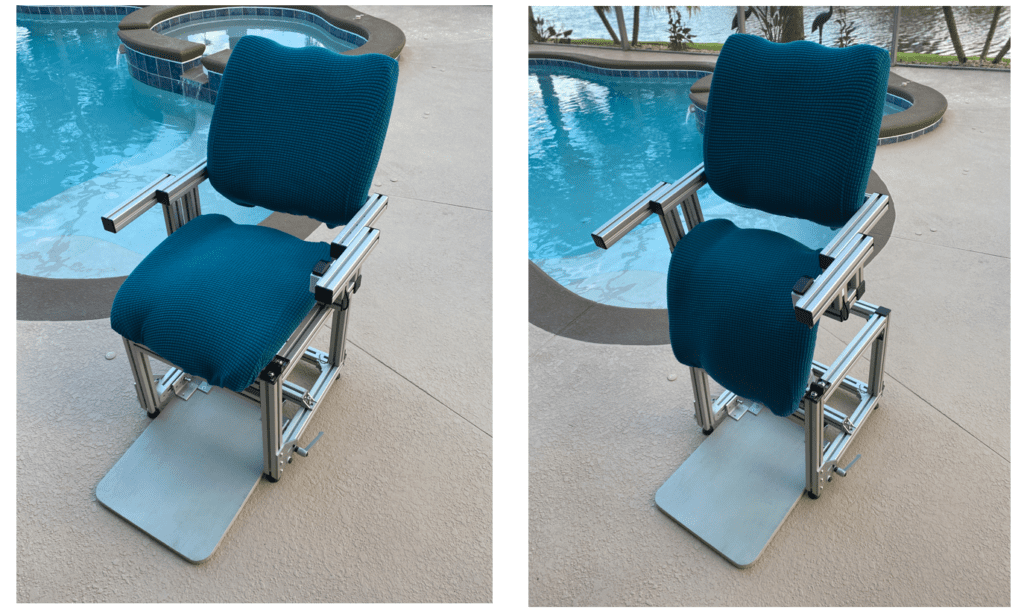

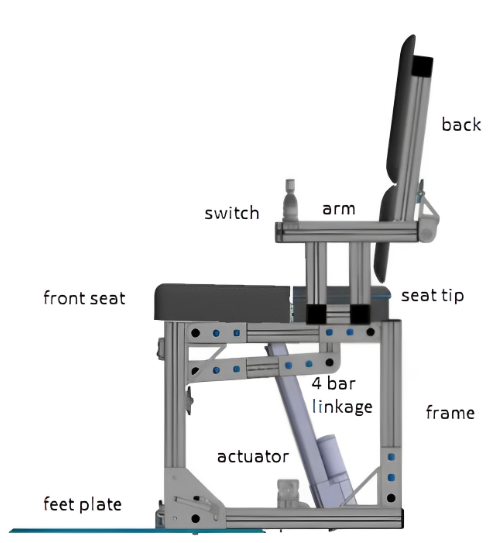

SAAR’s robotic chair has an aluminum base frame that supports a linear actuator, feet plate, seat, and back support. The seat comprises two parts: a front seat for thigh support and a back seat tip for buttocks support. The back seat tip remains parallel to the floor for safety and comfort during STS motion. The ballscrew actuator is fixed to the frame at the stationary bottom end and hinged to the front seat at the moving upper end.

The robotic chair includes wheels for easy transfer and motorized folding for compact storage and efficient transport. For improved performance, the chair also includes AI/ML and sensors that send signals such as force, position, and velocity to the controller in real time during STS motion. These signals give the controller information on the STS system’s stability, safety, and comfort and can result in actions such as adjusting the actuator motor torque and sending the user warning signals. The controller can also compare this information to performance data sets for people with similar weight, height, age, and medical limitations and output exercise rating feedback.

Next-generation robotic chairs should combine design simplicity with AI/ML readiness at various complexity levels. Their simplest version can be shaped like a chair with a single motorized axis and an adjustable link to yield up and down motion with a click of a switch. This solution can help people who experience discomfort and pain and rely on hand support, a forward posture lean, or assistive human support while sitting and standing. Getting to and from the chair may not be the problem, so simple assistive STS motion support may increase independence and reduce pain.

In its higher-level version, a robotic chair can be an assistive physical therapist. The chair can support people during repetitive STS activities at various start and stop angles while activating other limbs as part of their prescribed or recommended daily exercises.

A robotic chair can advance further and become the next-generation solution for people who cannot execute STS motion without human support. In this version, AI/ML technology monitors the user’s posture, controls the chair motion, and provides feedback signals to change the user’s posture for better stability and comfort during STS motion. This robotic chair can be an exoskeleton with one degree of freedom at the knee joint. Its most advanced version can be part of a complex exoskeleton system that provides STS motion and assistive robotic solutions for short travel gaits, extended travel wheelchair riding, climbing stairs, and resting.

Reinforcement learning motion control

The motion of complex automated systems, such as exoskeletons and robotic chairs, can be controlled in different ways. Bang-bang (on/off) control is considered the most efficient, while PID control is the most common. Kalman filters are used to estimate system states in random (noisy) environments, and fuzzy logic is used for nonlinear processes. Reinforcement learning (RL) motion control, part of AI/ML technology, is a relatively new approach that could be used for the highly complex, nonlinear, random environments expected in human-robot interactions.
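To make the contrast between the two classical approaches concrete, here is a minimal Python sketch of a bang-bang controller and a discrete PID controller. The function and class names, the deadband parameter, and the gain values in the usage line are illustrative assumptions, not part of any chair controller described in this article.

```python
from dataclasses import dataclass


def bang_bang(error: float, max_output: float, deadband: float = 0.0) -> float:
    """On/off control: full output toward the target, zero inside the deadband."""
    if abs(error) <= deadband:
        return 0.0
    return max_output if error > 0 else -max_output


@dataclass
class PID:
    """Discrete PID controller with interpretable gains (hypothetical values)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error: float, dt: float) -> float:
        """Return the control output for the current error sample."""
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Usage with made-up gains: one control step for a position error of 1.0.
pid = PID(kp=2.0, ki=0.5, kd=0.1)
first_output = pid.step(error=1.0, dt=0.1)
```

Each PID gain has a direct physical reading (stiffness, steady-state correction, damping), which is the property the NN-based RL policy gives up in exchange for handling nonlinear, random disturbances.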

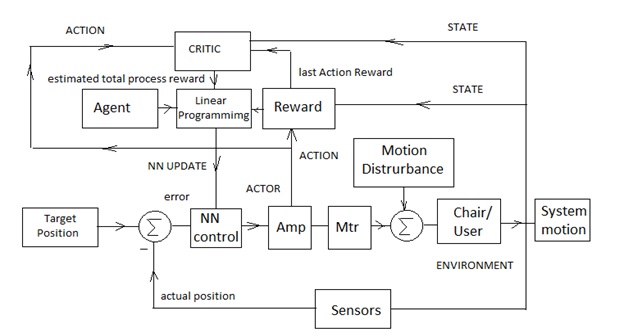

RL Agents learn to optimize hundreds of neural network (NN) parameters using dynamic programming in a simulated environment. The program includes a custom Reward function for the desired performance, which is determined by system modeling and validation. RL Agents perform the tuning process and employ Critic and Actor estimators of the total expected Reward for the process. Dynamic programming and the Bellman equation are used to optimize performance by minimizing the difference between the Critic and Actor total Reward estimates, which are functions of system States and Actions. States can be measured by force, position, or velocity sensors. Actions may include controller commands, such as a motor torque or an alarm signal.
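For readers who want the quantities behind this description, the textbook forms are sketched below. The notation is standard RL notation assumed here for illustration (State $s_t$, Action $a_t$, Reward $r_t$, discount factor $\gamma$, policy $\pi$, value estimate $V$); the article does not give the exact formulation used in the chair simulation.

$$
G_t = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k},
\qquad
V^{\pi}(s_t) = \mathbb{E}_{\pi}\!\left[\, r_t + \gamma\, V^{\pi}(s_{t+1}) \,\right],
\qquad
\delta_t = r_t + \gamma\, V(s_{t+1}) - V(s_t)
$$

Here $G_t$ is the total discounted Reward, the middle expression is the Bellman equation for the expected total Reward under policy $\pi$, and $\delta_t$ is the temporal-difference error. In common actor-critic methods, training drives $\delta_t$ toward zero, which is one way to read the "difference between estimates" that the Agent minimizes.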

Unlike PID gains, which act on the proportional, integral, and derivative terms of the error between actual and desired motion, the resulting NN control parameters may not be intuitively meaningful. Control engineers commonly use tools like TensorFlow, Python, and MATLAB to optimize the NN parameters, then deploy the resulting NN Policy, which converts system States to Actions, to the real system’s motion controller. Since the simulation model may not be an exact representation of the real environment, engineers use trial and error with different RL Agents, Reward functions, and programming tools. The final resulting performance, which may have been difficult or impossible to visualize before the simulation, may make engineering sense.
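A deployed NN Policy is, at run time, just a function from a State vector to an Action. The sketch below shows that idea with a tiny two-layer network in plain Python. The layer sizes, the weight values, the `max_torque` limit, and the choice of [force, position, velocity] as the State are all hypothetical; a trained policy would have its own structure and far more parameters.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def dense(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Affine layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def policy(state: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector,
           max_torque: float) -> float:
    """Map a State [force, position, velocity] to a bounded torque Action."""
    hidden = [math.tanh(h) for h in dense(w1, b1, state)]
    raw = dense(w2, b2, hidden)[0]
    # tanh squashes the output so the command never exceeds the torque limit.
    return max_torque * math.tanh(raw)


# Hypothetical weights for illustration only; real values come from RL training.
W1 = [[0.1, -0.4, 0.2], [0.3, 0.5, -0.1]]
B1 = [0.0, 0.1]
W2 = [[0.8, -0.6]]
B2 = [0.05]

torque = policy([0.5, 0.2, -0.1], W1, B1, W2, B2, max_torque=10.0)
```

Unlike the three PID gains, none of the individual numbers in `W1` or `W2` has a direct physical interpretation, which is why validation against the simulation and the real system matters.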

Simulation testing

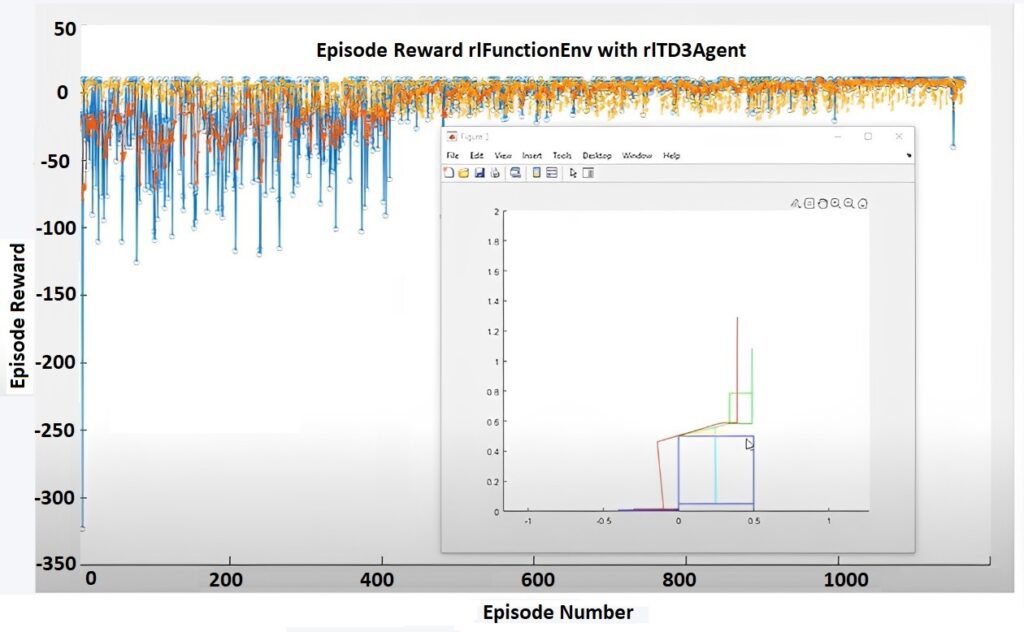

In an STS chair simulation, an Actor was responsible for the Action of driving the chair actuator. The Actor received State variables and position error input and used the NN control parameters to output an Action, a control signal to the actuator. The actuator acted on the chair along with nonlinear (shock) and random (unexpected reaction) disturbances from the user’s behavior. As a result, the chair seat accelerated and yielded a new system motion State, including force, position, and velocity. The new State, which also implied a new user posture, entered a Reward function and together with the Action yielded a Reward. A high Reward was given for a safer user posture, a lower Reward was given in a warning zone, and a high penalty was given for unsafe slip conditions.
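The three-zone Reward described above could be sketched as follows. This is an assumption-laden illustration, not the Reward function used in the simulation: the input names (`torso_lean_deg`, `slip_margin`, `torque_cmd`), the zone thresholds, the reward magnitudes, and the small effort penalty on the Action are all hypothetical.

```python
SAFE_LEAN_DEG = 10.0      # hypothetical limit on torso deviation from vertical
WARN_SLIP_MARGIN = 0.3    # hypothetical threshold for the warning zone
EFFORT_WEIGHT = 0.01      # hypothetical weight on actuator effort
SLIP_PENALTY = -100.0     # hypothetical penalty for an unsafe slip


def sts_reward(torso_lean_deg: float, slip_margin: float, torque_cmd: float) -> float:
    """Reward for one simulation step.

    torso_lean_deg: torso deviation from vertical, in degrees (State).
    slip_margin: normalized distance to slipping; 0 or less means slipping (State).
    torque_cmd: actuator command for this step (Action).
    """
    if slip_margin <= 0.0:
        return SLIP_PENALTY  # unsafe slip condition: high penalty
    effort_penalty = EFFORT_WEIGHT * torque_cmd ** 2
    in_warning_zone = (slip_margin < WARN_SLIP_MARGIN
                       or abs(torso_lean_deg) > SAFE_LEAN_DEG)
    if in_warning_zone:
        return 0.2 - effort_penalty  # lower Reward in the warning zone
    return 1.0 - effort_penalty      # high Reward for a safe posture
```

Because the Reward depends on both the State and the Action, the Agent is pushed toward postures that are safe and toward commands that do not waste actuator effort.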

The calculated Reward was then fed to a Critic, which estimated the total expected Reward of the entire STS process for its NN calculations. The RL Agent took the total Reward estimate that resulted from the Actor’s Action, compared it with the total Reward estimate from the Critic, and applied dynamic programming with a Bellman equation to change the Actor NN control parameters and minimize the difference between the two estimates. After a few hundred episode iterations in MATLAB, the Actor’s total Reward estimate converged to the Critic’s total Reward estimate. This convergence implied an optimal posture, shown as an animated stick figure, in which the user’s torso was vertical and the user’s buttocks were positioned as far back as possible on the seat tip.
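The idea of repeatedly applying the Bellman equation until an estimate of total Reward converges can be shown on a toy problem. The sketch below is a tabular TD(0) value estimator in Python, not the MATLAB actor-critic setup used in the simulation: it covers only the Critic side, with a fixed policy that always raises the seat one step. The four seat positions, the reward of 1.0 on reaching the standing position, and the learning parameters are all hypothetical.

```python
GAMMA = 0.9    # discount factor (hypothetical)
ALPHA = 0.1    # learning rate (hypothetical)
N_STATES = 4   # hypothetical seat positions: 0 = seated ... 3 = standing (terminal)


def step(state: int):
    """Fixed policy: raise the seat one position; reward 1.0 on reaching standing."""
    next_state = state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train_critic(episodes: int = 500):
    """Estimate the total discounted Reward of each State with TD(0)."""
    values = [0.0] * N_STATES
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            next_state, reward, done = step(state)
            # Bellman target: immediate Reward plus discounted next-State value.
            target = reward + (0.0 if done else GAMMA * values[next_state])
            td_error = target - values[state]
            values[state] += ALPHA * td_error
            state = next_state
    return values


values = train_critic()
```

After a few hundred episodes the temporal-difference error shrinks toward zero and the estimates settle at the discounted totals for this toy chain (0.81, 0.9, and 1.0 for the three non-terminal positions), mirroring the convergence behavior described above at a much smaller scale.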

POC system testing

Proof of concept (POC) testing is an integral part of RL product development that starts with safety and stability testing and results in conceptual design changes. For example, the initial robotic chair model was unstable at certain seat positions, so the development team added a stabilizing feet plate to eliminate chair rocking.

Additional testing helped determine an optimal posture for stable STS motion, as the user tended to slip at a seat angle of about 45°. Adding fabric with a high coefficient of friction increased the slip angle but was not enough to prevent slipping. More testing proved that keeping the torso vertical and the buttocks as far back as possible on the seat tip yielded STS stability. However, the user felt discomfort while sitting on the very narrow back corner of the seat. Therefore, the development team oriented the seat tip to provide comfortable buttocks support throughout the STS process.
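A rough way to see why friction alone did not solve the problem is the classic rigid-block-on-an-incline model, in which sliding starts when the tangent of the incline angle exceeds the static friction coefficient. The Python sketch below applies that simplified model, assuming the reported 45° refers to the seat angle from horizontal. A seated person is not a rigid block, and the article gives no friction coefficients, so the numbers are only indicative.

```python
import math


def slip_angle_deg(mu: float) -> float:
    """Incline angle at which a rigid block starts to slide, for static friction mu."""
    return math.degrees(math.atan(mu))


def required_friction(angle_deg: float) -> float:
    """Static friction coefficient needed to hold a rigid block at a given angle."""
    return math.tan(math.radians(angle_deg))


mu_at_45 = required_friction(45.0)   # about 1.0
mu_at_70 = required_friction(70.0)   # grows quickly as the seat approaches vertical
```

Under this model, slipping at about 45° corresponds to a friction coefficient near 1.0, and the required coefficient rises steeply at larger seat angles. That is consistent with the finding that posture and seat-tip support, rather than fabric alone, were needed for stability.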

POC tests also validated the optimal sitting posture and compared it to the simulated RL test results. When a person with limited mobility tested STS motion on a regular chair, the posture was the opposite of the optimal one: buttocks toward the chair front, torso bent forward, and hands on the chair seat for support. The POC tests of the robotic chair resulted in the same conclusions as the RL process simulation. The safest and most comfortable posture for STS motion in the robotic chair was keeping the torso vertical with the buttocks positioned as close to the back seat tip as possible.

This example shows how AI/ML features may provide a competitive edge in product cost/performance and manage conflicting performance objectives, such as maximum safety at the highest speed. However, including AI/ML capabilities in product development may require higher-level engineering analysis, simulation, validation, and deployment than traditional processes do.

This article was contributed by Boaz Eidelberg, Ph.D., at SAAR.
